See in full: https://www.twitch.tv/magicleap

AR & VR hosted by Raw Haus at LittleStar

I share my thoughts on prototyping AR & VR: why it is essential, and how it is a different system to design for.

G4C Brain Jam Presentations

Video presentations of four of the eight experiences created during the Brain Jam. The Brain Jam is part of Games 4 Change at Parsons School of Design in New York City.

It brings together neuroscientists, engineers, and game developers to create applications and experiences that connect with the brain.

"Mind Bending - a universal controller"

"Helping families with autism see eye to eye through augmented reality."


"SenseScape - an immersive VR sensory processing challenge that is intended to aid diagnosis and monitoring of concussion"

Me, myself, and I

Recorded via mobile. Please forgive the gorilla arm.

Games 4 Change - XR 4 Change Day Highlights

Vijay Ravindran of FloreoTech.com created lessons and simulations for autistic children to practice navigating challenging real-life situations, such as crossing the road or interacting with police. He offered great insight into how user testing informs product decisions, such as adding logic so that a simulation has more or fewer steps depending on the child’s actions. It is a very well-thought-out experience, from the interaction logic, to the fidelity each simulation requires, to the multi-user setup (the child is in the headset while the teacher manages the session on a tablet, each with an interface into the simulation adapted to their role). VR is not only for empathy. It can have a positive impact on people through practical (or magical) experiences.
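The adaptive behavior described above, where a simulation gains or loses steps depending on the child’s actions, can be sketched roughly as follows. This is a hypothetical illustration, not Floreo’s implementation: the `Step` and `plan_attempt` names, the road-crossing steps, and the rule of inserting a hint before a missed step while dropping mastered practice steps are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Step:
    """One step in a hypothetical simulation lesson."""
    name: str
    required: bool = True       # practice-only steps can be dropped once mastered
    hint: Optional[str] = None  # extra prompt that can be inserted before this step


def plan_attempt(steps: List[Step], missed: Set[str], mastered: Set[str]) -> List[str]:
    """Return the step names for the child's next attempt.

    - A step the child missed last time gets its hint inserted before it (more steps).
    - An optional practice step the child has mastered is skipped (fewer steps).
    """
    plan: List[str] = []
    for step in steps:
        if not step.required and step.name in mastered:
            continue  # mastered practice step: shorten the lesson
        if step.name in missed and step.hint:
            plan.append(step.hint)  # missed step: add scaffolding first
        plan.append(step.name)
    return plan


if __name__ == "__main__":
    # Hypothetical road-crossing lesson used only for illustration.
    crossing = [
        Step("stop at the curb", hint="prompt: point to the curb"),
        Step("practice: name the traffic light colors", required=False),
        Step("look left", hint="prompt: turn your head left"),
        Step("look right", hint="prompt: turn your head right"),
        Step("cross when clear"),
    ]
    print(plan_attempt(crossing, missed={"look right"}, mastered=set()))
    print(plan_attempt(crossing, missed=set(), mastered={"practice: name the traffic light colors"}))
```

Keeping the core steps fixed and only adding hints or removing practice steps means every attempt still rehearses the full real-world sequence, while its length adapts to the child.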

A wonderful 360 film, My Africa, funded by the Tiffany Foundation. I would say it was the best linear storytelling I have experienced in an immersive format. Having been raised in Africa, I may be biased toward this film.

Brain Jam builds: functioning prototypes that explored the interplay between the brain and virtual or augmented experiences. The Brain Jam produced 8 different builds (or simulations) in 48 hours. One explored the potential cognitive impact of switching the camera view from first person to third person to omniscient (planar view), demonstrated in a simulation of collaborating with industrial robots. Some explored neural input, such as using EEG data from a headband to control simulated physics (Mind Bending). Another used eye tracking to encourage autistic children to make eye contact. Going beyond the silly “social media” lenses we currently use, this is the definition of a “social lens”: one that encourages and fosters real social interaction.

Gaia Dempsey, cofounder of DAQRI, on a new hierarchy of needs: revising Maslow’s hierarchy of needs (which was based on ethnographic studies of Blackfoot culture) using new input from First Nations tribal councils that use xR to envision the future and pass down cultural knowledge.

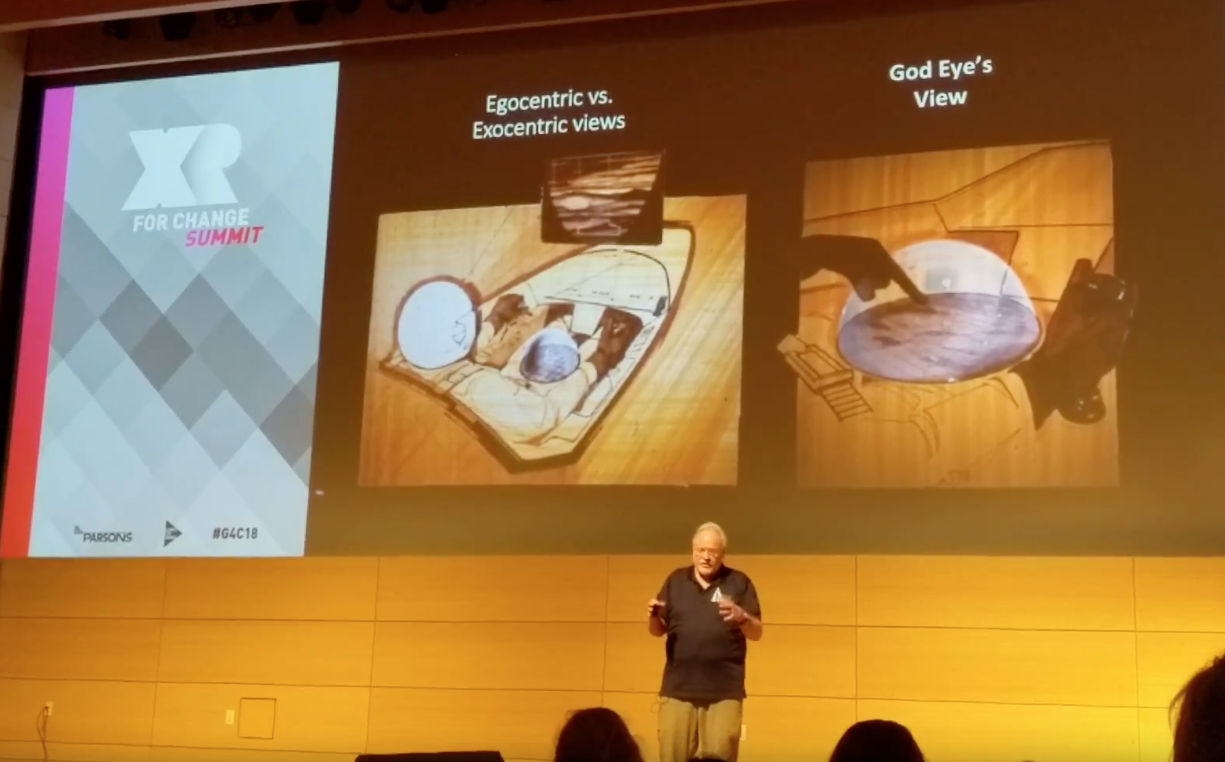

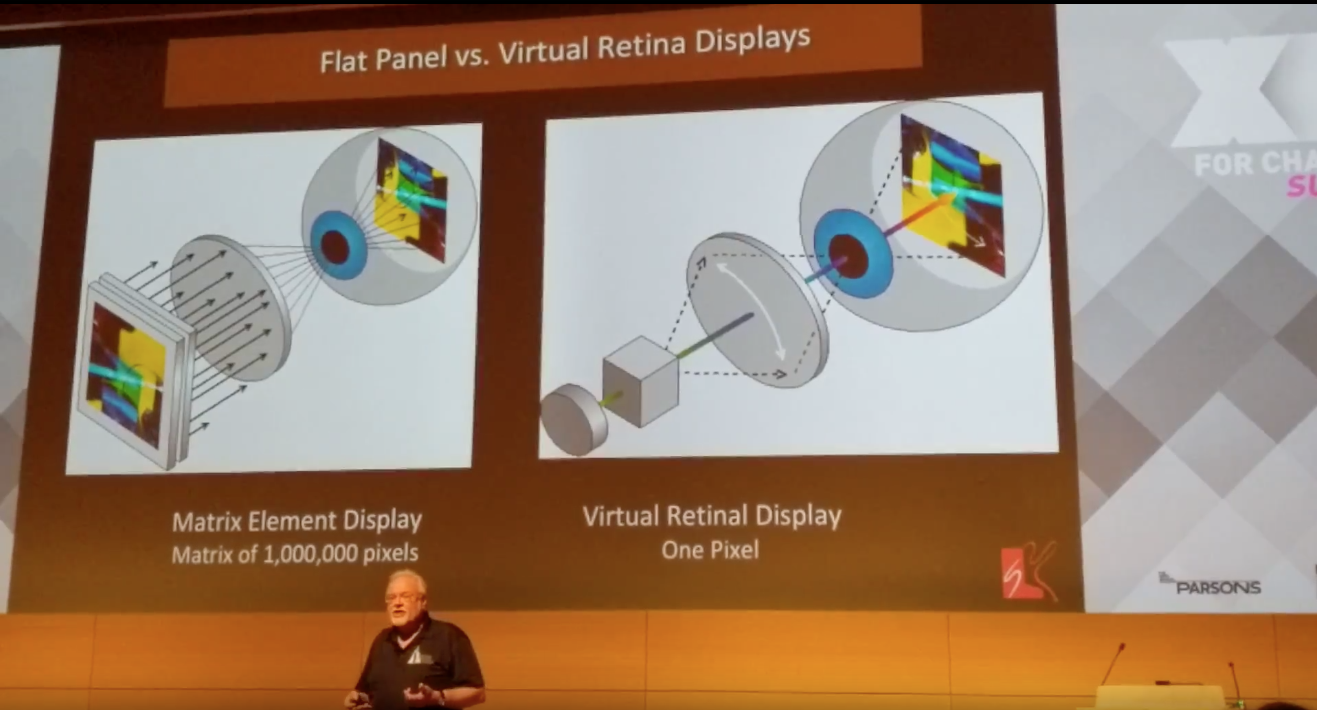

A more thorough account of the origins of VR, and guidelines for its future, from Tom Furness, who developed cockpit systems and HUDs on HMDs. These systems gave pilots “the ability to do things that had never been done before.” Not because the display changed the physics of the aircraft, but because it changed the pilot’s perception of the aircraft and merged pilot and machine more closely together. Spatial awareness (enemy location, your own location and orientation) and state awareness (weapons systems, fuel, speed, etc.) merged into a situational awareness that was integrated into the pilot’s visual perception.

Some private research from decades ago is still more advanced than what is on the market now, which makes me wonder: “What is being worked on now?”

Tom Furness of the Virtual World Society demonstrating visualization methods in which pointing at a location in the God's Eye View highlights the same point in the first-person HUD.

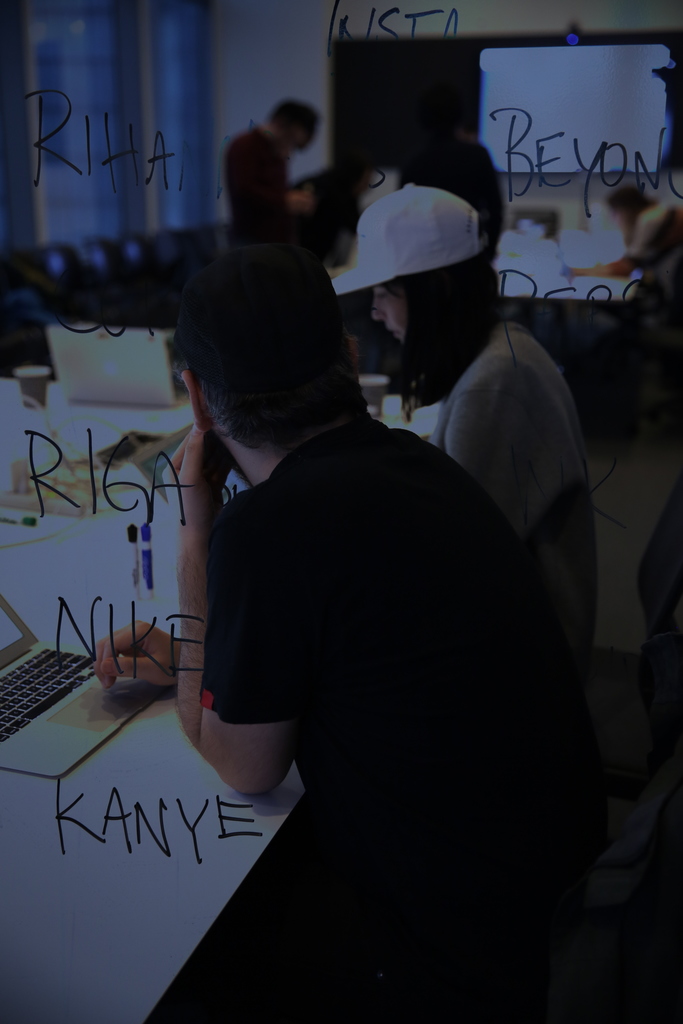

AI Rap Battle Bots

To design with or for a new technology, it is important to know how that technology works. A hackathon is one way to explore new worlds. It is a context where it’s ok to break things without the expectation of creating any final output, but this hackathon had all of the ingredients for creatives to hack something new together.

Teaming up with R/GA’s Chatbot Authority Brad Jacobson and software engineer Sam Royston, we created a Rap Battle Bot. Training it on thousands of lines of lyrics compiled from decades of rap allowed it to generate entirely new lines of poetry. Using Reply.AI, we created a chat interface on Facebook so people can battle for the title of best rapper: alive, dead, or digital. We ended the night with a live demo, a freestyle throwdown between R/GA's CTO and the Rap Battle Bot, that left the crowd asking for an encore.

Team:

Sam Brewton - Rap Battle Experience Designer

Brad Jacobson - Strategist & Resident Chatbot Authority

Sam Royston - Machine Learning Engineer

Michael Hirsch - Data Scientist - Machine Learning

Photos by Annabel Ruddle

TBT November 2016

New at R/GA

I am very happy to share that two of my students from the Interaction Design class I teach at the School of Visual Arts in New York City are now summer apprentices with R/GA. Xin An is at R/GA Shanghai. Laney Lynn is at R/GA LA.

After I taught one session on how to prototype AR & VR with web technologies, they went to town and created the works below.

Maze by Xin An

A VR experience based on dreams.

Gallery Vision by Laney Lynn

A business card enhanced with AR, connected to a larger concept for making the Museum of Arts and Design more interactive.

I believe this shows the value of being able to prototype for AR and VR. When working at the cutting edge, in a space with few established best practices, it is essential to be able to work in the medium itself rather than be bound by legacy tools that cannot convey the fundamental ways the medium is experienced.

So, good job, and welcome to our two new team members at R/GA.

Undergraduate Class: Interaction Design at SVA

Prototypes made in Ottifox.

Build Once Deploy Anywhere

This is the dream that everyone is trying to achieve.

Thinking in Pictures

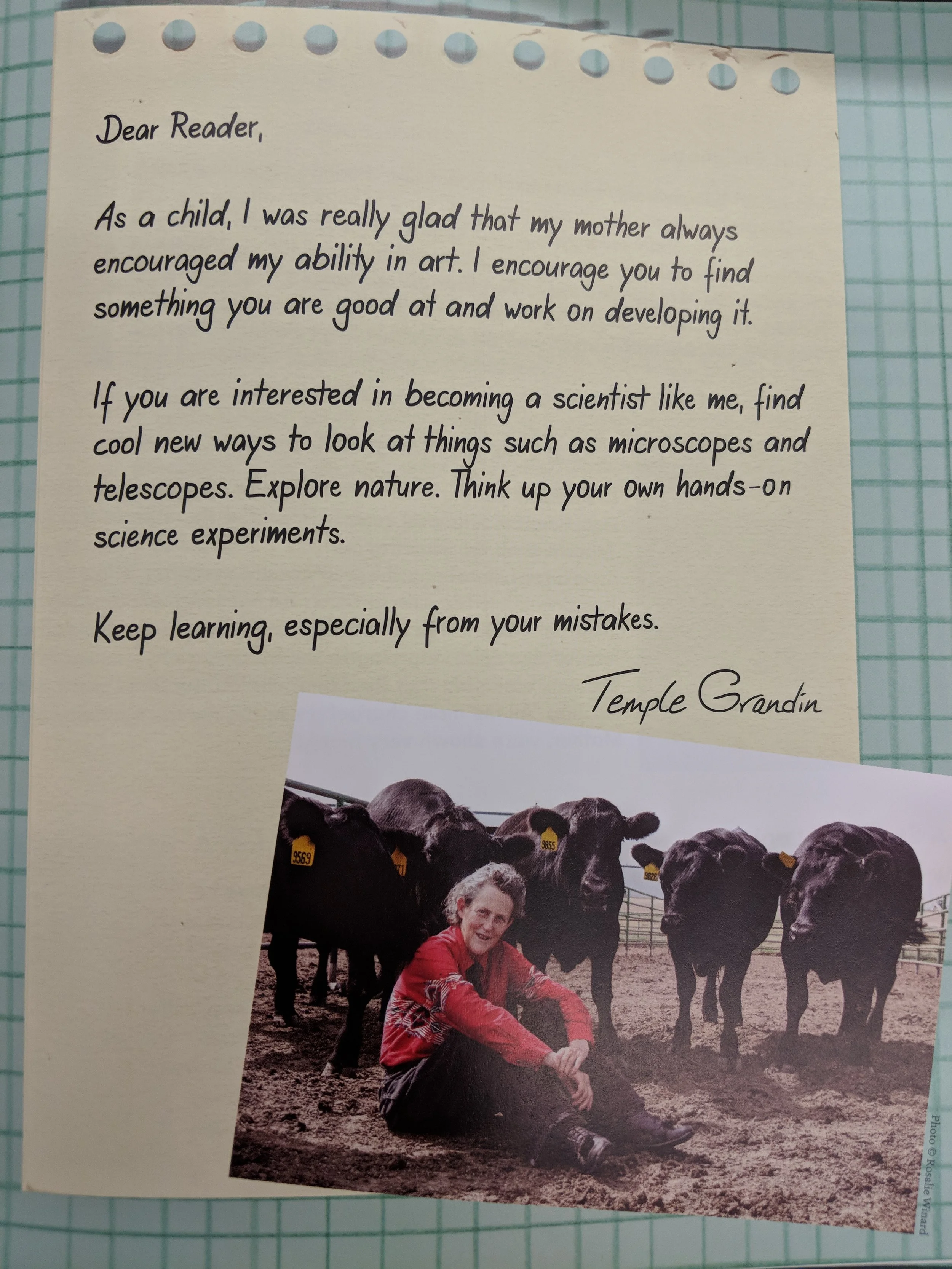

I came across this book on the kids' table at the Strand Book Store.

It is about Temple Grandin, a girl who thinks in pictures.

Grandin outlines three different kinds of minds:

1. "Photo Realistic Visual Thinkers": poor at algebra

2. "Pattern Thinkers": music and math

3. "Verbal Mind": poor at drawing

These appear to map onto a subset of Gardner's theory of multiple intelligences.

Traits characteristic of minds closer to Asperger's or autism:

- "The autistic mind tends to be a specialist mind"

- A trade-off between cognitive thinking and social interaction, or strengths weighted toward one of those areas.

- Sensory issues

- A strong ability to categorize, for example quickly classifying problems as equipment problems or personnel problems.

- Fixation

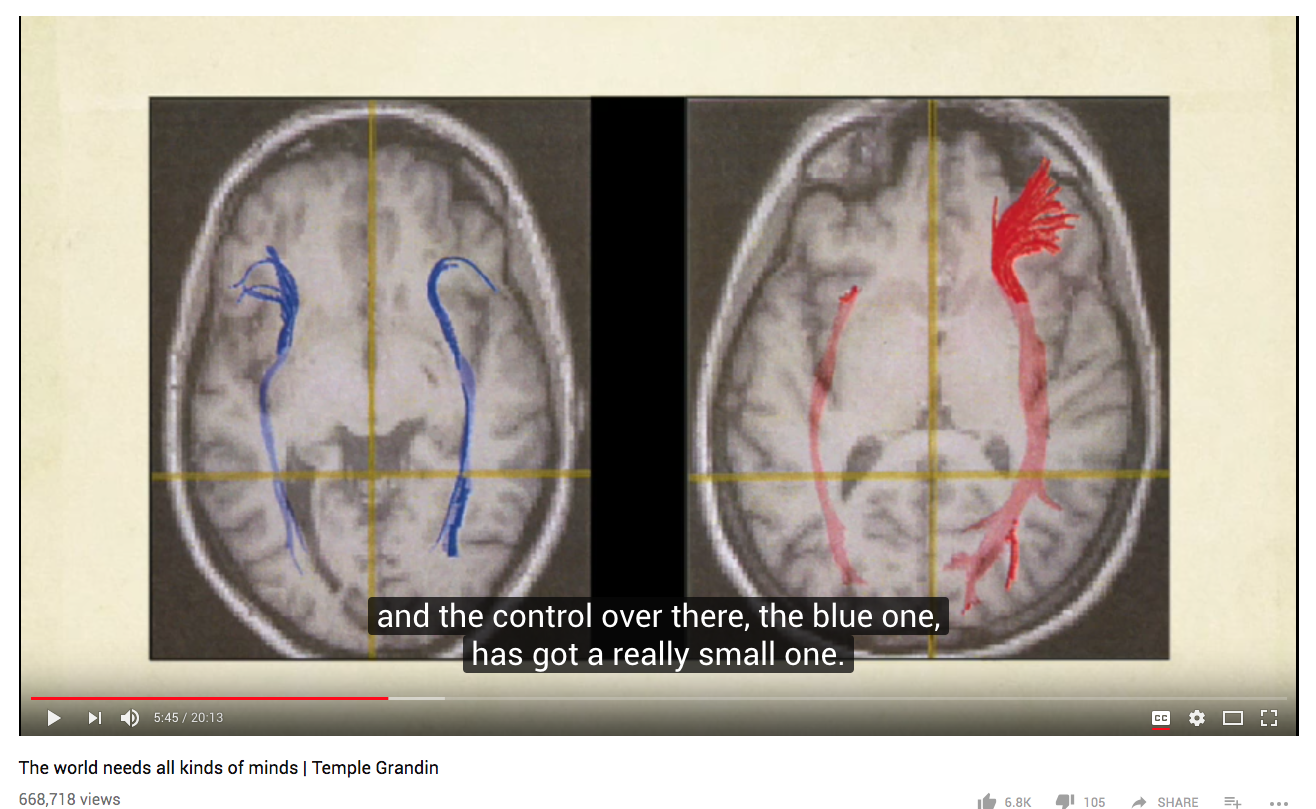

The primary visual cortex of a control brain (matched for age and gender) versus her brain.

“But one of the things that I was able to do in my design work is I could test-run a piece of equipment in my mind, just like a virtual reality computer system.”

Her comparison of her mind to a "VR computer system" is coincidental.

This was in 2010, before any consumer VR system of this millennium was even available as a dev kit.

But it relates to how we all think, because we live in a moving, volumetric system.

We think in imagery.

We test-run or simulate scenarios and processes in our mind.

We describe them visually for others to see.

Varifocal

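A varifocal display can move its focal plane (the distance at which the screen appears) closer to or farther from the user's eye, which makes it healthier to use.

It allows users to focus their eyes naturally inside the device.

One of the drawbacks of current HMD systems is that whether a virtual object appears far or close, your eyes always focus at the same distance, the display's fixed focal plane, even while they converge on the object at its apparent depth. This mismatch is known as the vergence-accommodation conflict.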

Even when you use a laptop or mobile phone for extended periods of time, you should take a break to look out of the window and focus on something 20 meters away to exercise your visual focus.
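The focus mismatch described above can be quantified with two standard relations: the accommodation demand for an object at distance d is 1/d diopters, and the angle between the two eyes' lines of sight is 2·atan(IPD / 2d). The sketch below assumes a 63 mm interpupillary distance and a hypothetical fixed focal plane at 2 m (real headsets vary), and treats an ideal varifocal display as one whose focal plane follows the object's depth.

```python
import math

# Assumed values for illustration only: a typical adult interpupillary
# distance and a hypothetical fixed focal plane for a non-varifocal headset.
IPD_M = 0.063
FIXED_FOCAL_PLANE_M = 2.0


def diopters(distance_m: float) -> float:
    """Accommodation demand: optical power (1/m) the eye needs to focus at distance_m."""
    return 1.0 / distance_m


def vergence_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two eyes' lines of sight when both fixate a point at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def focus_mismatch(object_distance_m: float, focal_plane_m: float = FIXED_FOCAL_PLANE_M) -> float:
    """Gap in diopters between where the eyes converge and where they must focus."""
    return abs(diopters(object_distance_m) - diopters(focal_plane_m))


if __name__ == "__main__":
    for d in (0.3, 0.5, 1.0, 2.0, 20.0):
        fixed = focus_mismatch(d)                    # focal plane stuck at 2 m
        varifocal = focus_mismatch(d, focal_plane_m=d)  # ideal: plane follows the object
        print(f"object at {d:5.1f} m: vergence {vergence_deg(d):5.2f} deg, "
              f"fixed-focus mismatch {fixed:.2f} D, varifocal mismatch {varifocal:.2f} D")
```

Under these assumptions, an object at 0.5 m produces a 1.5 D mismatch on the fixed-focus display and none on the ideal varifocal one, while an object at 2 m matches in both cases because it sits on the fixed plane.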

Three differences in 3D

There are three fundamental differences when creating for AR & VR.

These are differences that both designers and engineers encounter when developing 3D experiences.