Designing and Prototyping Augmented Reality

How do we design for AR? Prototyping multi-scene augmented reality.

Understanding, designing, and prototyping augmented reality.

First came the age of personal computing, then the dot-com era, when we realized the internet could connect people to each other. More recently came interpersonal computing, which defined more than a decade of digital experience through the notion of “mobile first,” with VC firms that invested only in mobile and designers who called themselves “mobile designers.” We are now entering the age of mixed reality, or spatial computing.

As innovations such as autonomous vehicles, blockchain, and cryptocurrency arrive, I believe augmented reality will be the interface to all of them.

AR will be the interface to everything that you cannot see: anything that lacks a dedicated physical interface, such as autonomous vehicles, deliveries, service processes, blockchain, and cryptocurrency. AR will also replace many digital interfaces.

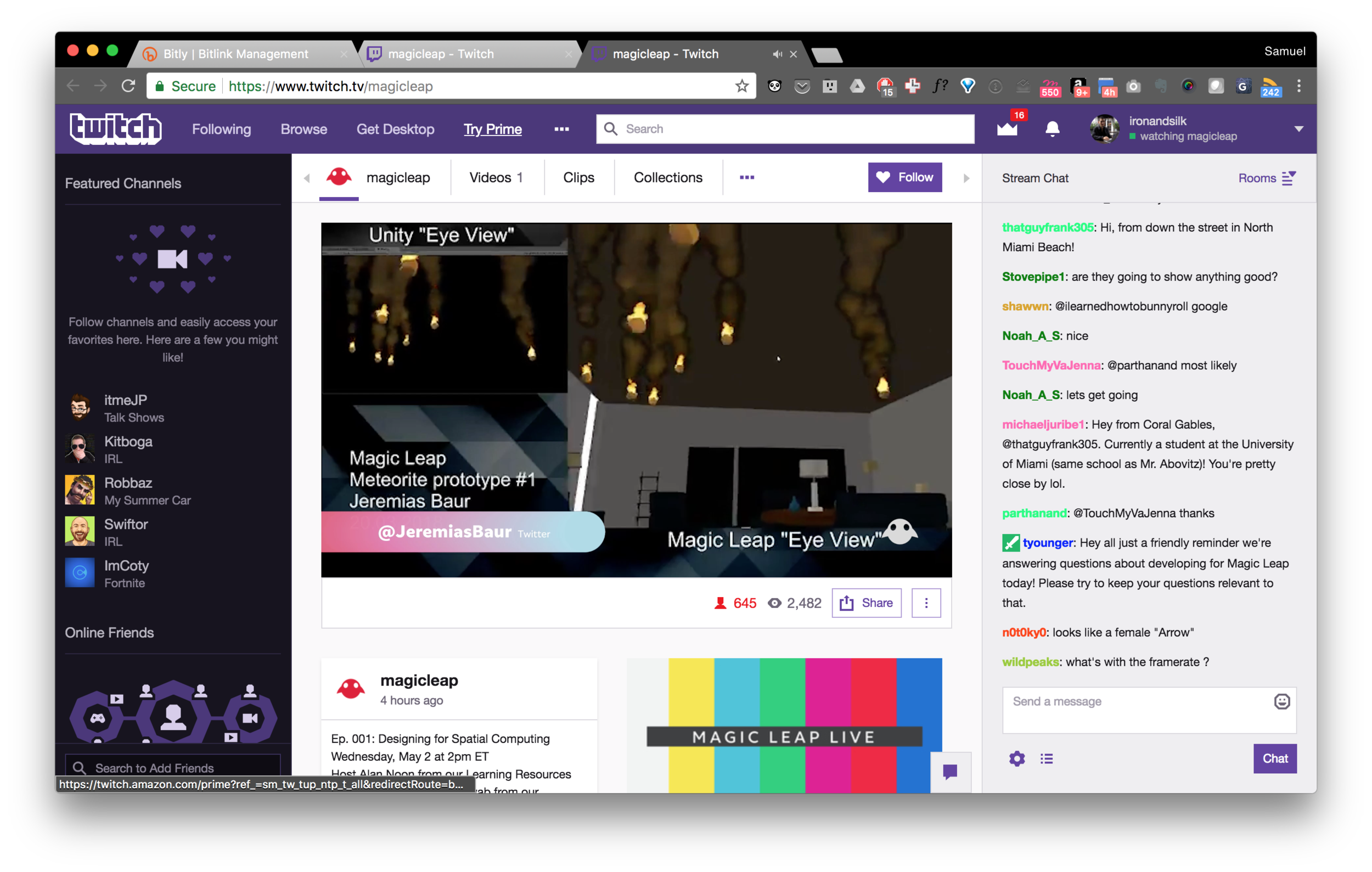

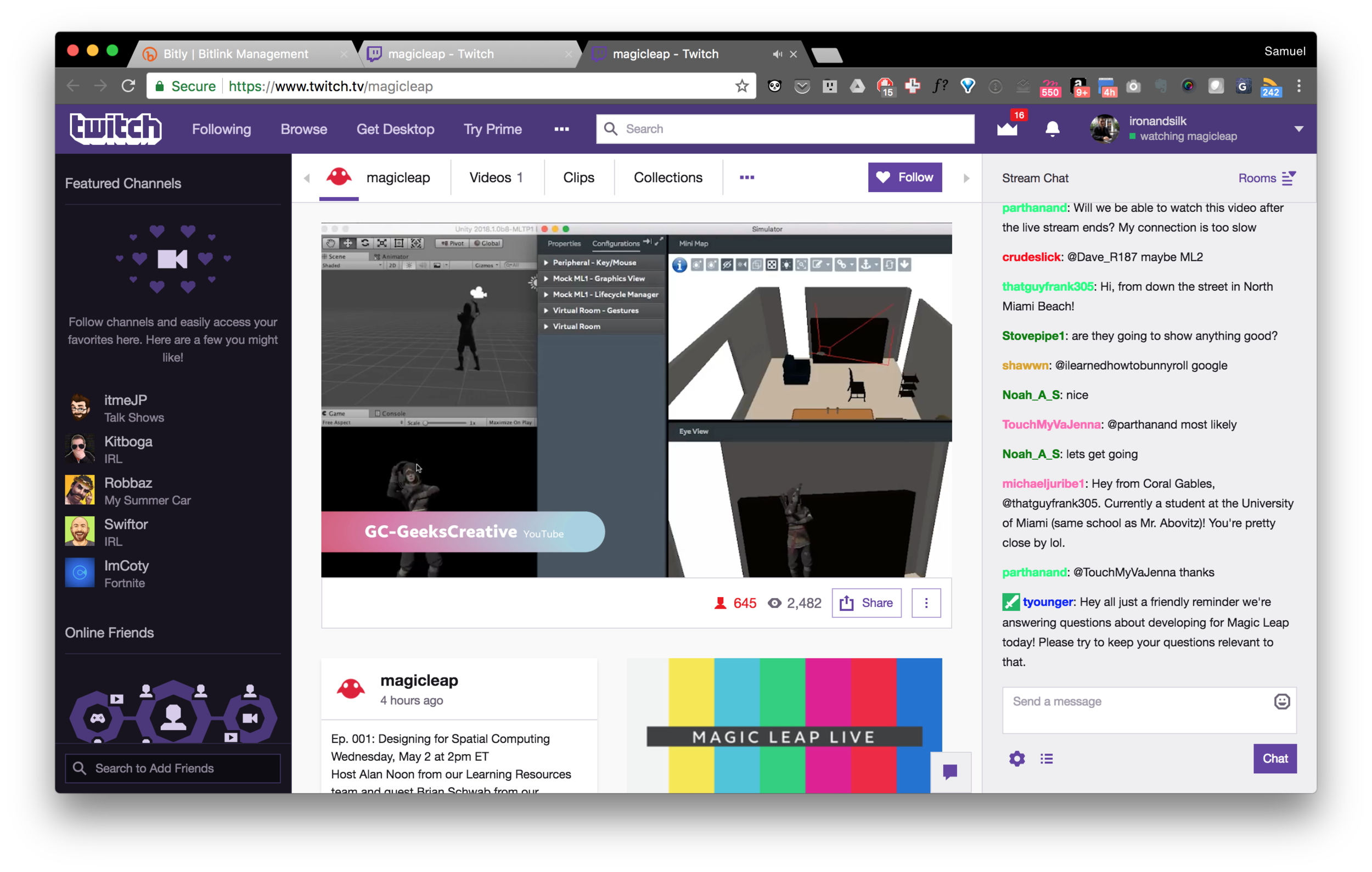

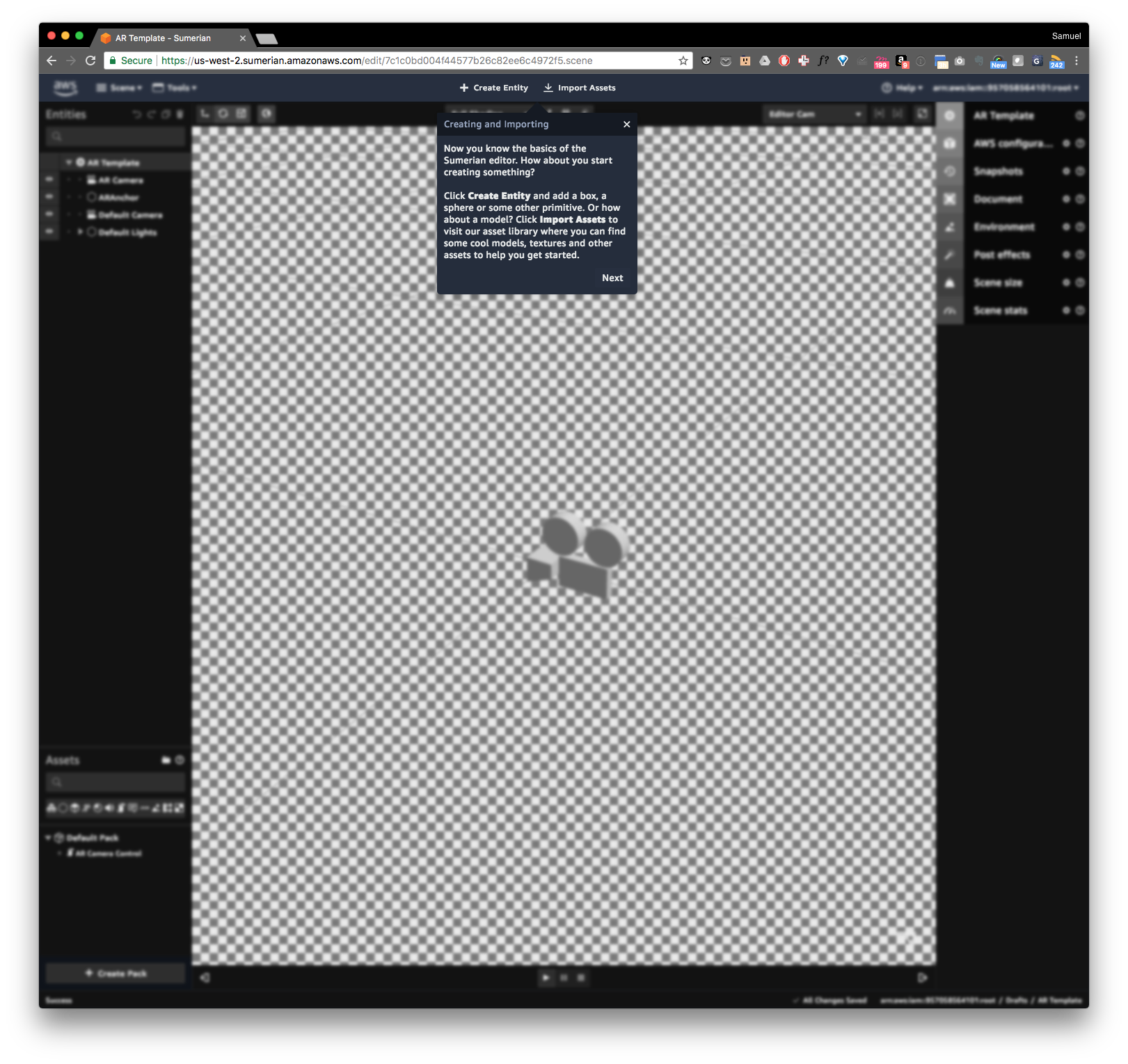

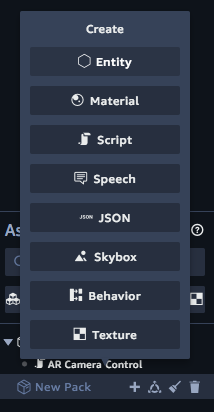

As we enter an age of spatial computing, or designing across space, agencies, brands, and designers are asking, “How do we design for virtual and augmented reality?” When told that AR experiences are made in game development engines, people are often confused by this misunderstood term. It leads them to believe that gaming is the only application, or that the end goal is entertainment alone and that augmented reality cannot provide real business value. These game development engines, with their extra-heavy interfaces, can intimidate many designers, and the very notion of an integrated development environment where you both design and code scares away designers and new creative adopters.

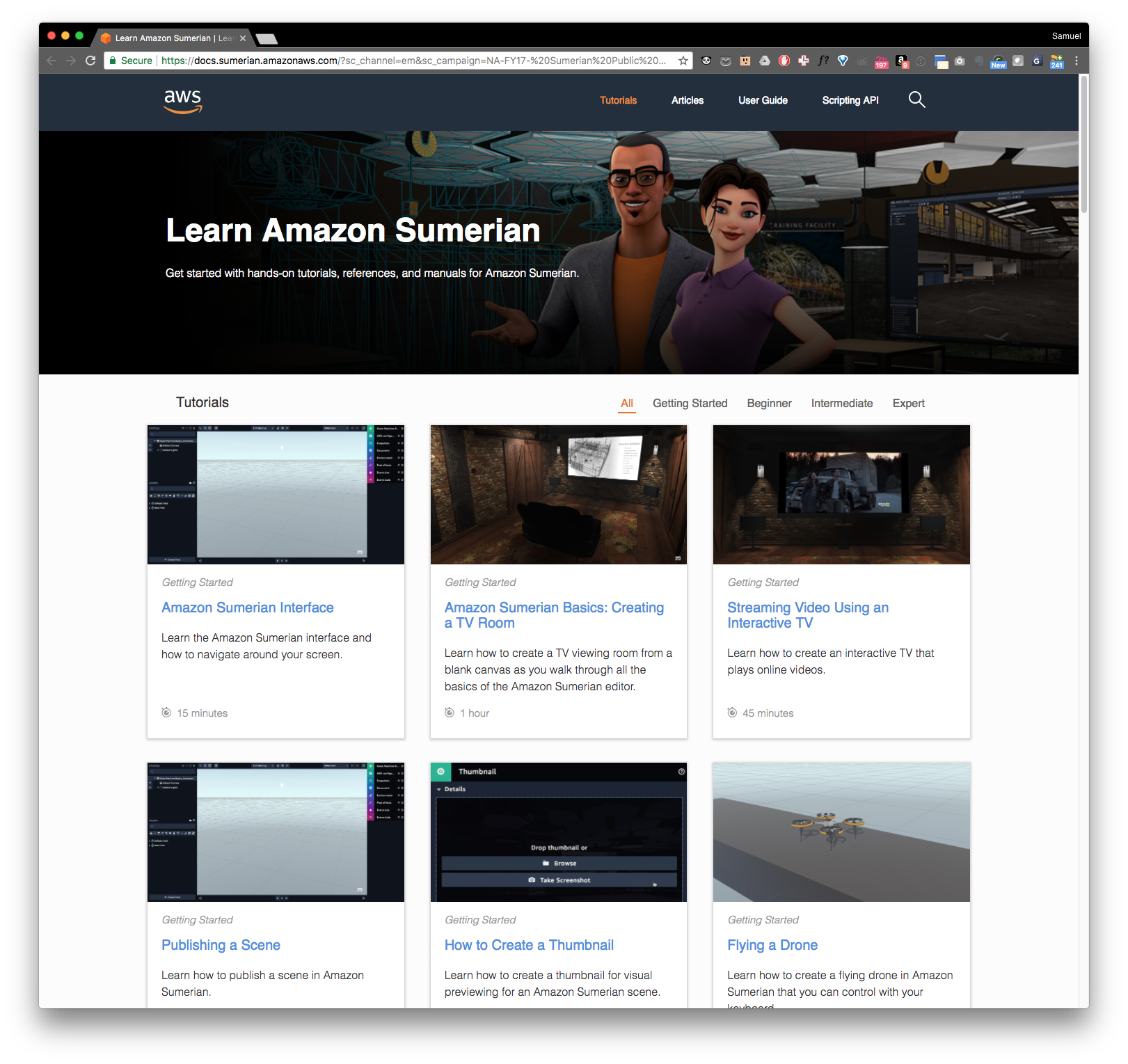

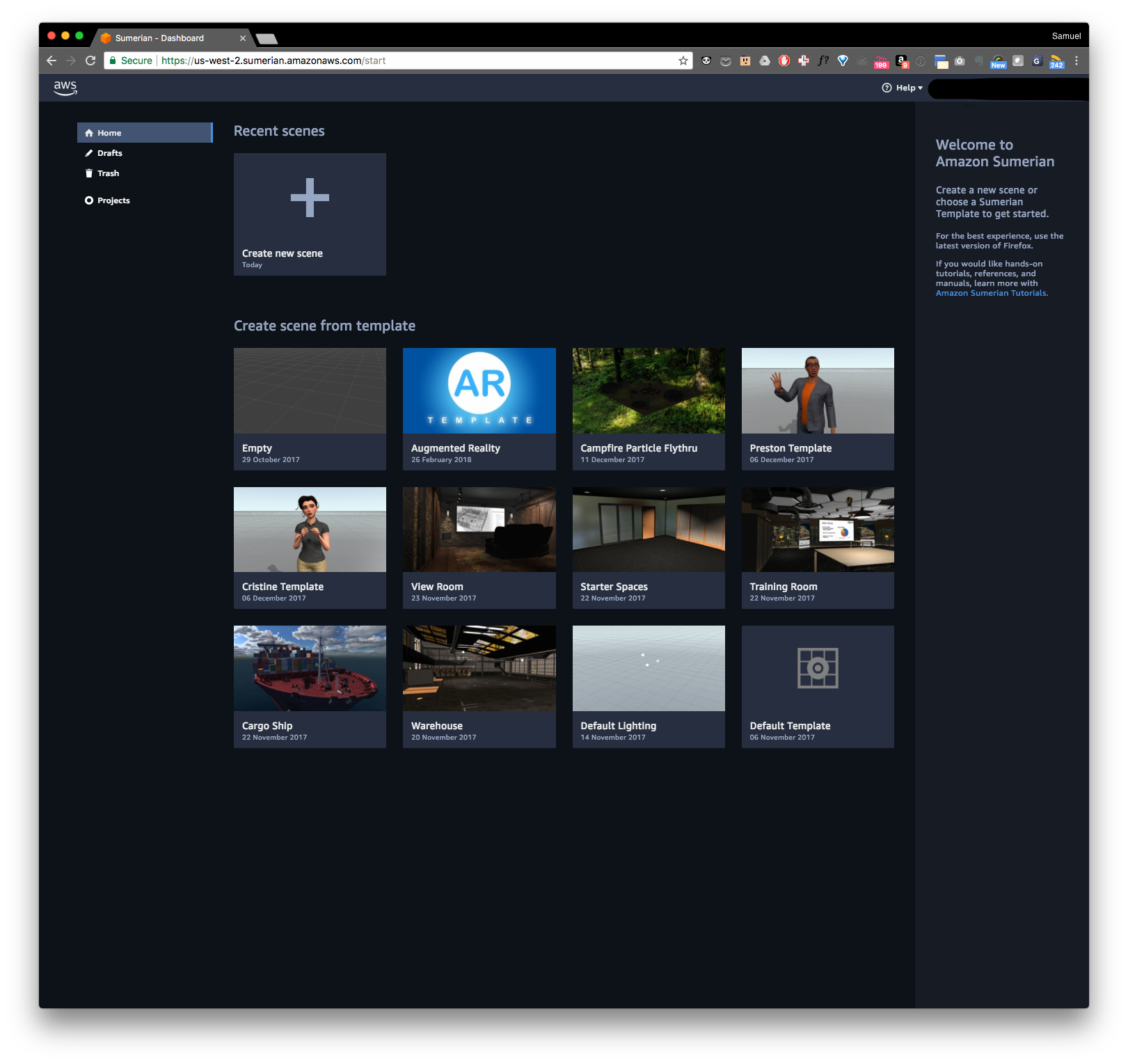

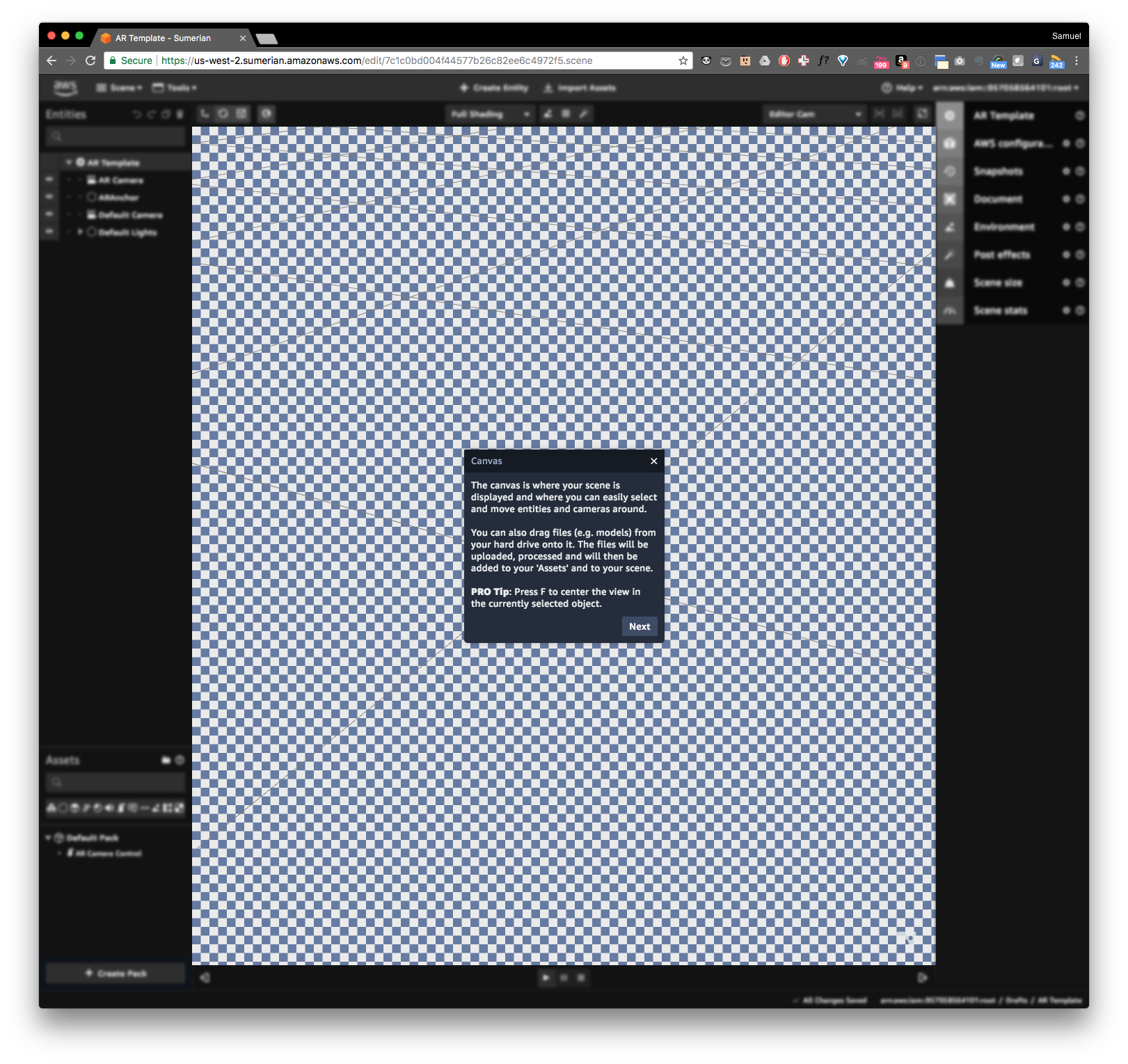

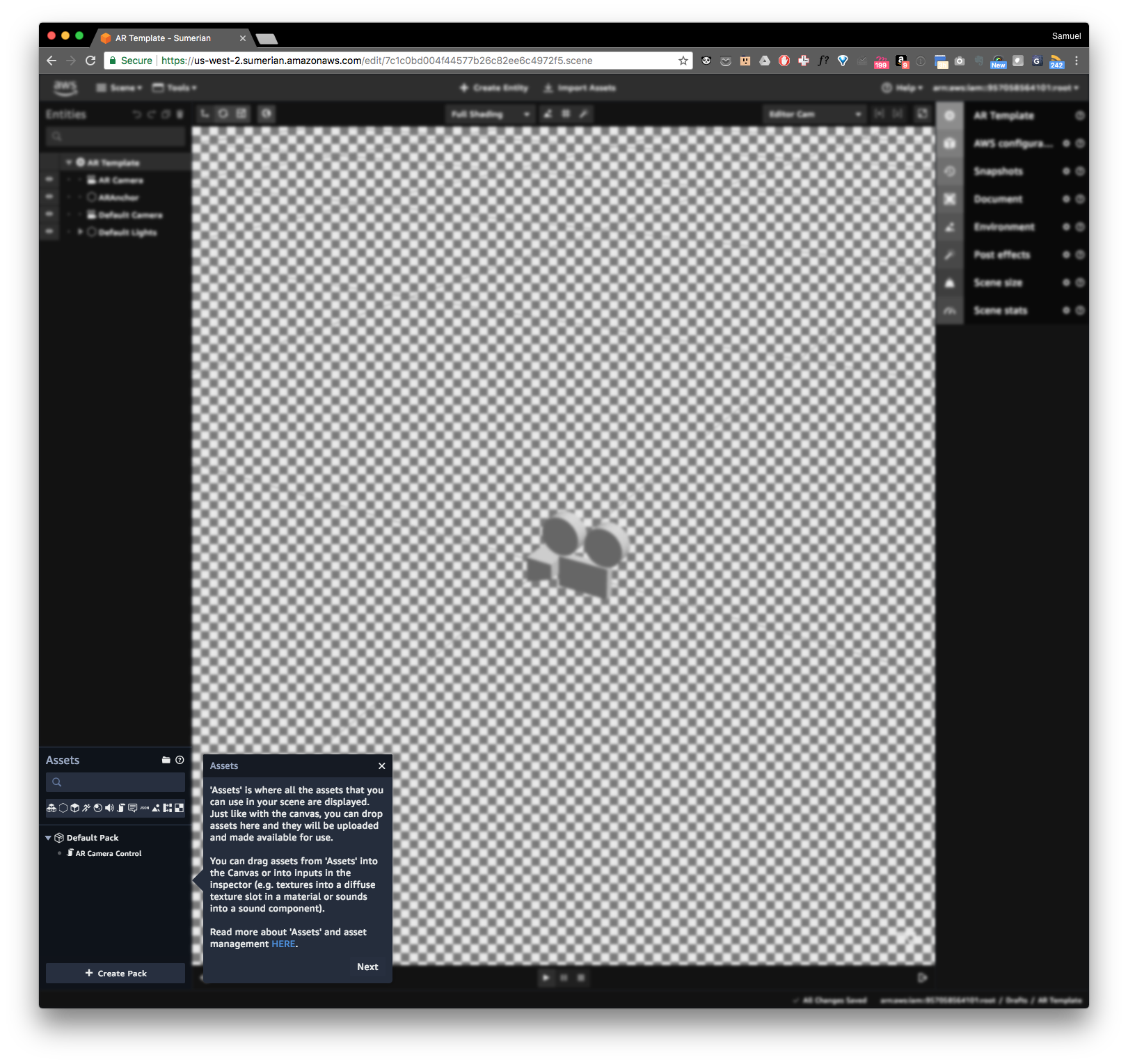

A few select companies are bridging the skill, knowledge, and tool gaps that currently separate designing from developing 3D experiences. By creating prototyping tools for VR and AR, these companies are bringing these frontier technologies closer to fruition. There is currently a battle among 2D design and prototyping tools, as the design and prototyping phases merge and iterations become quicker to support more agile processes. If you are a digital product designer, you are well aware of this battle and are keeping track of which tools are the better bets to master for your process, teams, and assets. If you are a venture capitalist with bets on frontier technologies, you have looked at the tool layer of augmented and virtual reality, because you understand it is the basis for creating experiences and content for reality. You also understand that this frontier is rapidly approaching. These AR prototyping tools not only bring this frontier closer; they also empower designers to make things beyond the screen.

What are these companies? Where were they founded, and how did they start? How do they bring us as creators closer to a future where frontier technologies are no longer experimental but instrumental in solving problems and visualizing what is currently invisible? I will cover these questions in a series of posts on the main prototyping tools, a process of design for development, and information architecture for virtual and augmented experiences.

Stay tuned.